Special Topic: Audio Production for Fulldome

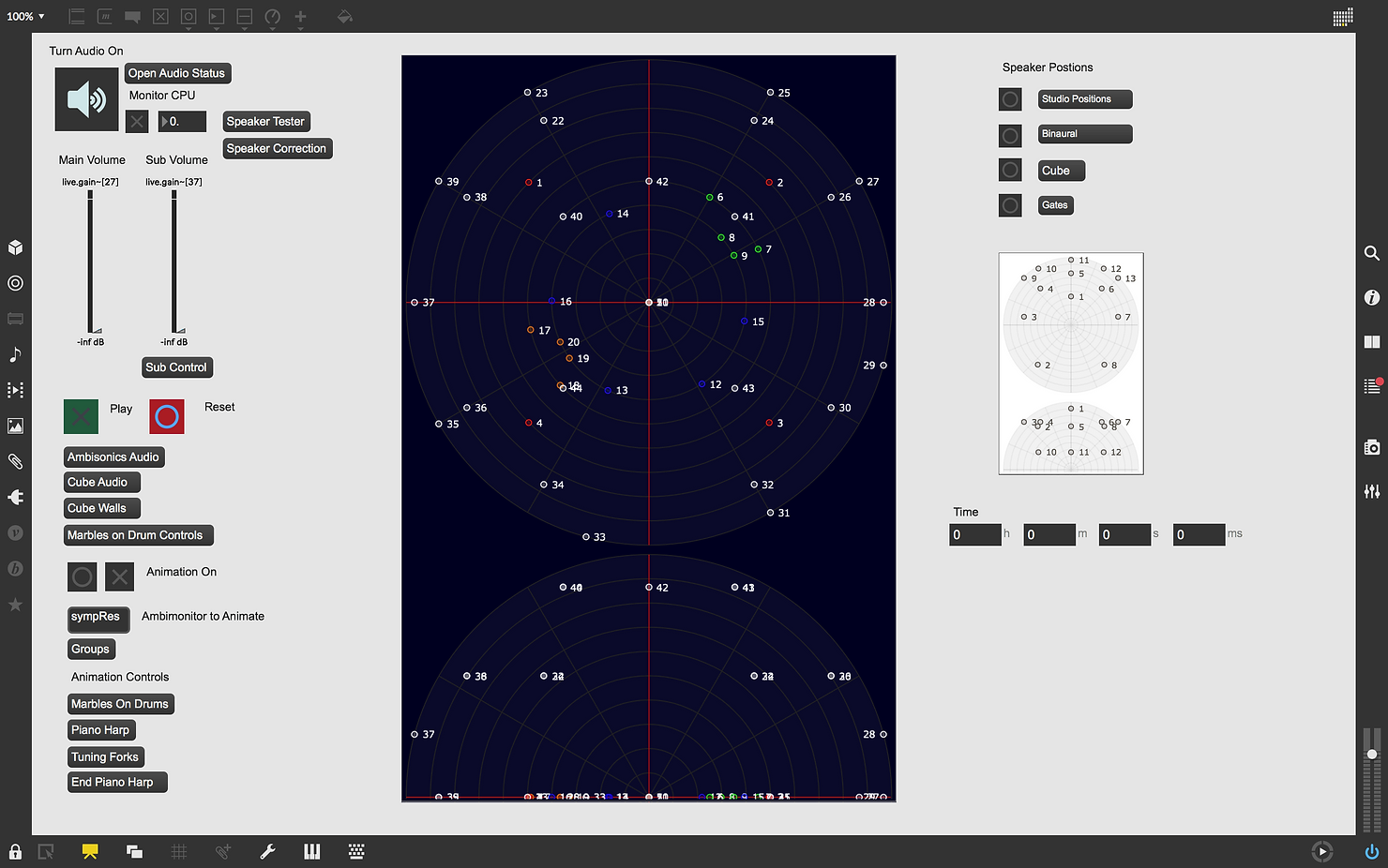

Seven years ago, I walked into the Gates Planetarium at the Denver Museum of Nature and Science with the opportunity to experiment with the planetarium’s audio/visual system. I had just graduated with my undergraduate degree in recording arts from the University of Colorado in Denver and suddenly found myself ‘playing’ with a 15.1 channel surround system (15 individual speakers arranged around the perimeter of the dome, with one subwoofer channel for low-frequency sounds). At that time, there were no tools available to interface a digital audio workstation with such a system, so we had to build our own. One of my former audio engineering professors had organized a group of us into an artist and technology collective we called Signal-to-Noise Labs. The collective shared an interest in technology, new media, and experimentation, and we all wanted to push the boundaries of what was previously possible. Building on our foundations in audio engineering, and with the help of one of our more tech-savvy members, we dove into learning the graphical programming environment Max/MSP, and within a month we were flying sound around in three-dimensional space. From then on I was hooked.

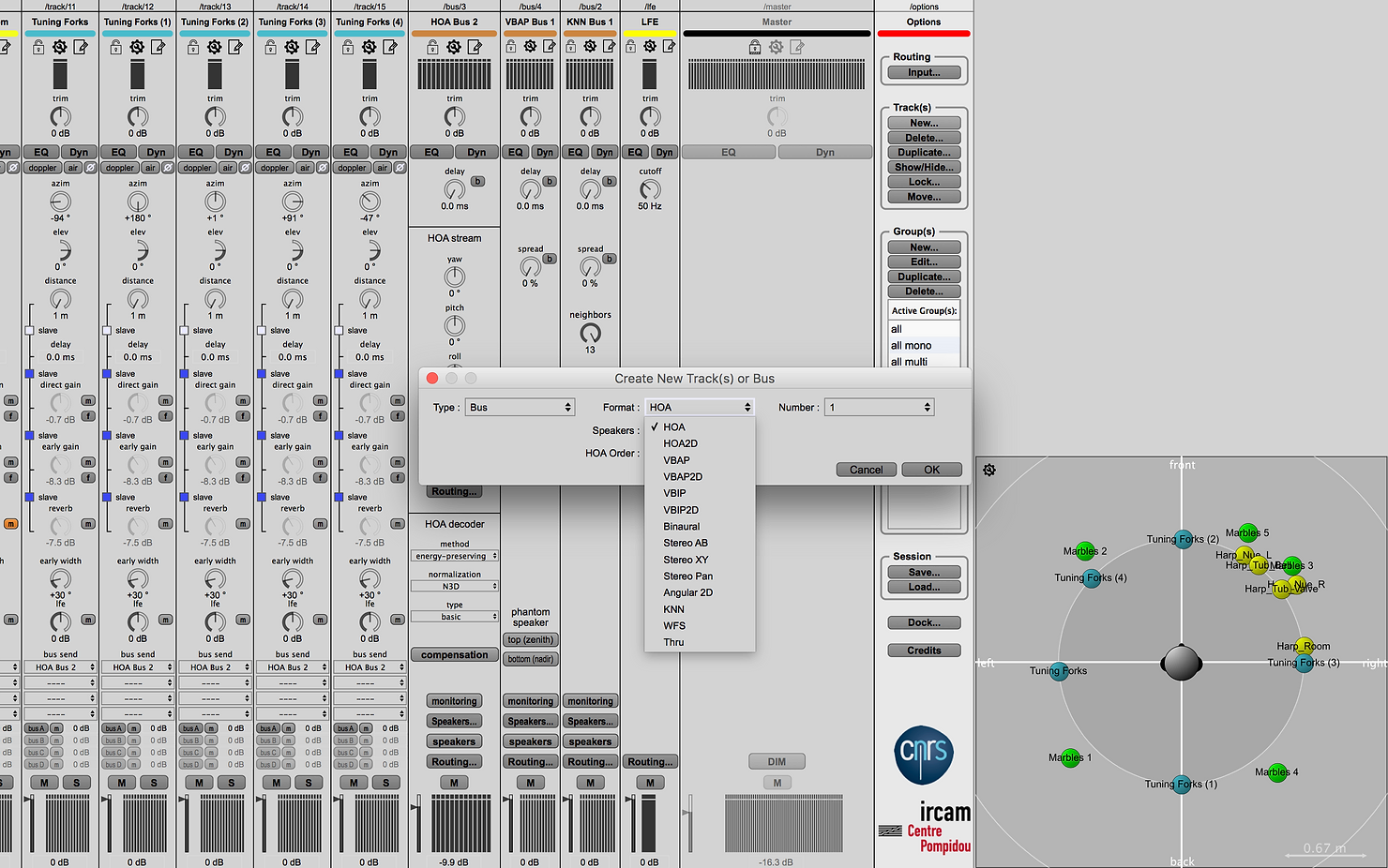

The tools we initially built were based on an algorithmic approach to audio spatialization called ambisonics. Ambisonics uses spherical harmonics to create a soundfield that can be decoded to variable speaker arrays. Surround sound, which has been the film industry standard for multichannel audio production to date, depends heavily on a specified speaker layout and a fairly specific acoustic environment. The way I think of the difference is this: with typical surround sound you pan the sound from this speaker to that speaker, whereas with ambisonics you pan the sound from here to there. When working with an unconventional speaker array, such as a 15.1 channel system, there is no easy way to translate a traditional surround sound mix to that environment. With ambisonics, however, your mix is independent of the speaker array, so it can move easily between different systems and accommodate unusual layouts.
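The ‘here to there’ idea can be made concrete with a small sketch. This is not the Max/MSP tooling described above; it is a minimal first-order illustration in Python under stated assumptions: the function names are hypothetical, the omnidirectional channel is left unscaled, and the decoder simply treats each speaker as a virtual cardioid microphone pointed in that speaker's direction. A real decoder would be tuned to the specific array and room.

```python
import math

def direction(azimuth_deg, elevation_deg):
    """Unit vector for a direction given in degrees (azimuth, elevation)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(az) * math.cos(el),
            math.sin(az) * math.cos(el),
            math.sin(el))

def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into four first-order channels (W, X, Y, Z).

    The encoding depends only on where the sound is, not on any speaker.
    Assumption: W is left unscaled; other conventions scale it differently.
    """
    x, y, z = direction(azimuth_deg, elevation_deg)
    return (sample, sample * x, sample * y, sample * z)

def decode_to_speakers(b_format, speakers):
    """Decode (W, X, Y, Z) to one feed per speaker.

    Assumption: each feed is a virtual cardioid aimed at the speaker,
    0.5 * (1 + cos(angle between source and speaker)) for a unit source.
    """
    w, x, y, z = b_format
    feeds = []
    for az, el in speakers:
        sx, sy, sz = direction(az, el)
        feeds.append(0.5 * (w + x * sx + y * sy + z * sz))
    return feeds

# The same encoded sound, decoded to two different (hypothetical) layouts.
encoded = encode_first_order(1.0, azimuth_deg=90.0, elevation_deg=30.0)
ring_of_four = [(0.0, 0.0), (90.0, 0.0), (180.0, 0.0), (270.0, 0.0)]
ring_plus_zenith = ring_of_four + [(0.0, 90.0)]
print(decode_to_speakers(encoded, ring_of_four))
print(decode_to_speakers(encoded, ring_plus_zenith))
```

The point of the sketch is that `encode_first_order` never sees a speaker position. Only the decode step knows about the array, which is why the same mix can be re-rendered for a different dome.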

Ambisonics is not the only algorithm for approaching mixing from a more ‘spatial’ perspective. Others include Vector Base Amplitude Panning (VBAP), Distance-Based Amplitude Panning (DBAP), and K-Nearest Neighbor (KNN). All of them have their strengths and their weaknesses. As I have continued my research, I have found that I like to use a combination of techniques to create spatial compositions. In my view, choosing between spatialization algorithms is like choosing between different microphones. They each have their own sound, and their use is highly dependent on the experience and tone you are trying to capture and portray.
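To give a feel for how one of these alternatives works, here is a minimal sketch of distance-based amplitude panning in Python. It follows the commonly published form of DBAP, in which each speaker's gain falls off with its distance from the virtual source and the gains are normalized to constant total power. The function name, the default 6 dB rolloff, and the `blur` value are illustrative assumptions, not settings taken from any particular tool.

```python
import math

def dbap_gains(source, speakers, rolloff_db=6.0, blur=0.1):
    """Per-speaker gains for a source position using distance-based panning.

    source   -- (x, y, z) position of the virtual sound
    speakers -- list of (x, y, z) speaker positions
    rolloff_db -- assumed level drop per doubling of distance
    blur     -- assumed small offset that keeps distances above zero
    """
    # Exponent that produces the requested rolloff per doubling of distance.
    a = rolloff_db / (20.0 * math.log10(2.0))
    distances = [
        math.sqrt(sum((s - p) ** 2 for s, p in zip(source, spk)) + blur ** 2)
        for spk in speakers
    ]
    raw = [1.0 / d ** a for d in distances]
    # Normalize so the squared gains sum to 1 (constant total power).
    k = 1.0 / math.sqrt(sum(g * g for g in raw))
    return [k * g for g in raw]

# Hypothetical layout: four speakers on a ring plus one at the zenith.
layout = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0),
          (0.0, -1.0, 0.0), (0.0, 0.0, 1.0)]
print(dbap_gains((0.2, 0.1, 0.9), layout))
```

Unlike ambisonics, this approach works directly from speaker positions and makes no assumption about where the listener sits, which is one reason the different algorithms end up with different characters.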

Currently, typical surround sound is the ‘standard’ in terms of production and workflow for fulldome environments. Because surround sound is standardized in the film production world, most audio software already has toolsets for mixing to it, and composers and sound designers are already familiar with working in this format. This is not the case with some of the other algorithmic methods for spatialization. However, the surround sound format can be incredibly limiting when working with fulldome environments.

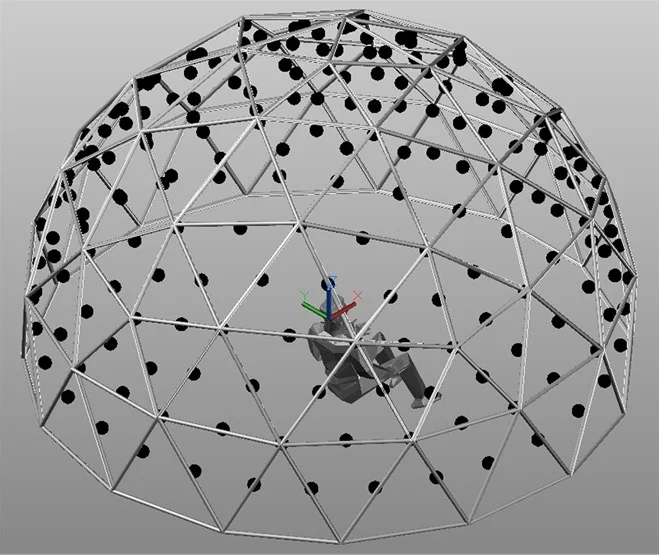

Surround sound typically forms a two-dimensional ring of speakers around the listener at ear level. If the sound system for a dome is designed that way, the speakers are located at the edges of the space and not where the image is being projected. Some spaces have the ring lifted so that the speakers are more ‘in the dome’. While this brings the speakers in line with the imagery, the system is still limited in its ability to create a sound image at the zenith of the dome, the central point for much of the visual content.

The new theatrical immersive sound formats that have been released for traditional cinema are Dolby Atmos and Auro-3D. The challenge with these setups in fulldome environments is that they require specified speaker arrangements, which are primarily rectangular, for audio playback. There are no standards when it comes to designing an audio system for a fulldome environment. Every dome differs in its playback system, the number and position of its speakers, and its acoustic treatment. Even differences in the shape of the dome and the material used can completely change how a mix will sound from one dome to the next. This makes any of these more proprietary ‘standards’, which rely on specified speaker arrangements and acoustic environments, nearly impossible to implement on a large scale.

By taking a spatial approach to audio in fulldome, facilities and content creators could more easily adjust to different speaker positions and layouts. Also, most of these algorithms use spherical coordinate systems and lean naturally towards having speakers arranged spherically. The shape of the dome is therefore well suited to building an ideal playback system for these algorithms. The dome and the audio format can support each other in reaching the full potential of what is possible in these environments.

In the past few years, spurred by the rise in popularity of Virtual Reality, Augmented Reality, and Mixed Reality, immersive audio has become a ‘hot topic.’ Ambisonics used to be a specialized term that only a few select audio nerds would whisper about in chat rooms on the internet; now it is talked about frequently within mainstream audio circles. Bigger names in the audio industry are producing tools like affordable ambisonics microphones, plugins for mixing and exporting ambisonics files, and new 3D audio standards, like MPEG-H, that will allow for transmission of 3D audio files between different systems. However, the field of immersive audio is still the wild west. Producers, content creators, and engineers are trying to figure out how to create efficient workflows that fit production timeframes using tools that weren’t necessarily built for their needs. New tools are coming out every day, but they’re not quite there yet. It’s an exciting time in the industry, and I believe that many of these current developments will lead to better standards and easier workflows for fulldome environments. The tools are coming. They may still have some distance to go, but the fulldome industry has never been shy about pushing the boundaries of technical innovation. I strongly believe immersive audio is the future for sound in domes, and I am excited to be a part of that future.